RPC: now with infinite scroll

Author

Urvi Savla


Ever wished you could access any Stellar ledger, no matter how old, directly through RPC? Until now, that wasn’t possible due to RPC’s retention policy, which limited access to recent data only.

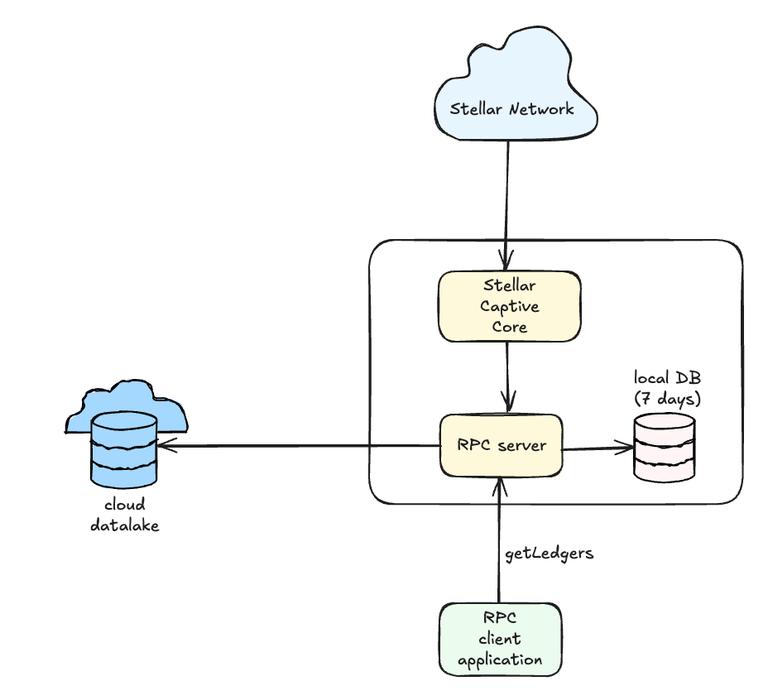

Good news! We are excited to introduce a feature that removes this limitation: RPC integration with the ledger data lake. Fulfilling the vision outlined in our earlier proof of concept, this integration lets you retrieve any historical ledger, from genesis to the latest, directly through your RPC queries.

What’s with the 7-day retention?

Each new RPC instance starts with an empty database and begins ingesting ledger data from the current tip of the network. It continues to ingest new ledgers in real time, retaining only the most recent 7 days of data. Older data is automatically pruned to maintain this window.

The 7-day retention period is both the default and the recommended setting. It helps keep the database size manageable and improves processing speed. This approach works well for most systems, which typically only require access to recent ledger data.
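To get a feel for what a 7-day window means in ledgers, here is a minimal sketch. It assumes an average ledger close time of about 5 seconds (an assumption; real close times vary), and the function names are hypothetical, not part of RPC.

```python
# Sketch: size of a 7-day retention window in ledgers, and whether a given
# ledger sequence would still be held locally by an RPC node.
# ASSUMPTION: ~5 s average ledger close time (not a protocol constant).

SECONDS_PER_DAY = 24 * 60 * 60
ASSUMED_LEDGER_CLOSE_SECONDS = 5  # assumption


def ledgers_in_window(days: int, close_seconds: int = ASSUMED_LEDGER_CLOSE_SECONDS) -> int:
    """Approximate number of ledgers closed during `days` days."""
    return days * SECONDS_PER_DAY // close_seconds


def oldest_retained_ledger(latest_ledger: int, window_ledgers: int) -> int:
    """Oldest sequence still inside the window (never below ledger 1)."""
    return max(1, latest_ledger - window_ledgers + 1)


def is_locally_retained(seq: int, latest_ledger: int, window_ledgers: int) -> bool:
    """True if `seq` falls inside the local retention window."""
    return oldest_retained_ledger(latest_ledger, window_ledgers) <= seq <= latest_ledger
```

Under that assumption, `ledgers_in_window(7)` comes to 120,960 ledgers; anything older than the tip minus that many ledgers has been pruned locally.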

What is a ledger data lake?

A ledger data lake is a cloud-based object store that contains the metadata of every Stellar ledger from genesis to the present. It follows a standardized format defined by SEP-0054, ensuring consistency across implementations.
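Because the layout is standardized, a ledger sequence number maps deterministically to an object key. The sketch below mirrors the layout we understand Galexie to write: each key carries a hex prefix equal to 0xFFFFFFFF minus the start ledger, so newer data sorts first. Treat the exact format, the default of one ledger per file and 64,000 files per partition, and the omitted compression suffix as assumptions, and check them against SEP-0054 and your data lake's own configuration.

```python
# Sketch: map a ledger sequence to a SEP-0054-style object key.
# ASSUMPTIONS: key format and defaults mirror our understanding of Galexie's
# layout; the compression file suffix is deliberately left off.

MAX_UINT32 = 0xFFFFFFFF


def object_key(seq: int, ledgers_per_file: int = 1, files_per_partition: int = 64000) -> str:
    """Return the suffix-less object key of the file that holds ledger `seq`."""
    key = ""
    if files_per_partition > 1:
        partition_size = ledgers_per_file * files_per_partition
        p_start = (seq // partition_size) * partition_size
        p_end = p_start + partition_size - 1
        # Partition "directory"; the inverted hex prefix sorts newest first.
        key = f"{MAX_UINT32 - p_start:08X}--{p_start}-{p_end}/"
    f_start = (seq // ledgers_per_file) * ledgers_per_file
    f_end = f_start + ledgers_per_file - 1
    key += f"{MAX_UINT32 - f_start:08X}--{f_start}"
    if f_end != f_start:
        # Files holding several ledgers name the whole range.
        key += f"-{f_end}"
    return key
```

For example, `object_key(2)` yields `FFFFFFFF--0-63999/FFFFFFFD--2` under these assumed defaults.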

How do I get access to the data lake?

You have two options for accessing a Stellar data lake:

1. Publicly hosted data lakes: You can use data lakes hosted publicly by third parties, which makes it easy to get started with minimal setup. This is the simplest option if you just need quick, read-only access to historical data.

One such publicly accessible ledger data lake is provided via the AWS Open Data program at s3://aws-public-blockchain/v1.1/stellar/ledgers/pubnet.

2. Host your own data lake: For greater control, availability, or compliance needs, you can host your own ledger data lake using Galexie, our purpose-built tool for managing ledger data.

With Galexie, you can:

- Bootstrap your own data lake

- Keep it continuously updated as new ledgers are published

To get started, check out the Galexie Admin Guide and blog post. Currently, Galexie supports creating a data lake on AWS S3 and Google Cloud Storage (GCS).
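If you want to poke at the public AWS data lake mentioned above without any SDK, a minimal sketch is to split its `s3://` URI into bucket and prefix and build a virtual-hosted-style HTTPS URL for an object. It assumes the bucket permits anonymous reads through the global S3 endpoint; the helper names are hypothetical, and the object key you pass in must come from the data lake's actual layout.

```python
# Sketch: turn an s3:// URI plus an object key into an HTTPS URL.
# ASSUMPTION: anonymous reads via the global virtual-hosted endpoint work
# for this bucket; a region-specific endpoint may be preferable.
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> tuple[str, str]:
    """Return (bucket, key_prefix) for an s3://bucket/prefix URI."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an s3:// URI: {uri!r}")
    return parsed.netloc, parsed.path.strip("/")


def https_object_url(uri: str, key: str) -> str:
    """Build a virtual-hosted-style URL for `key` under the URI's prefix."""
    bucket, prefix = split_s3_uri(uri)
    full_key = f"{prefix}/{key}" if prefix else key
    return f"https://{bucket}.s3.amazonaws.com/{full_key}"
```

With the AWS CLI, `aws s3 ls --no-sign-request` against the same URI is another quick way to browse a public bucket without credentials.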

Configuring RPC integration

Setting up your RPC node to use the data lake is a straightforward configuration change. It involves specifying your storage type (GCS or S3) and providing the bucket path.

For full configuration details, refer to the RPC admin guide. For a step-by-step example, you can also follow the community-maintained guide on this gist.

Fetching Ledgers

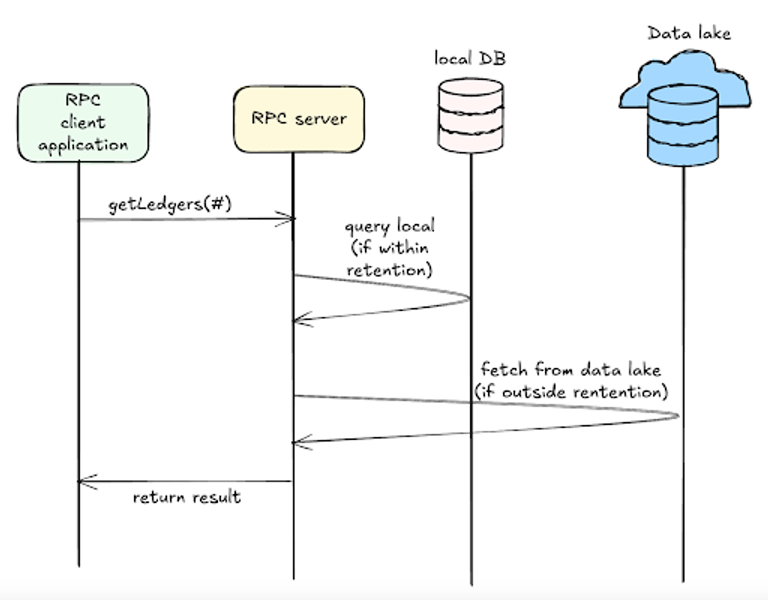

To kick things off, getLedgers is the first, and for now the only, endpoint to support this functionality. You can now call getLedgers with any ledger sequence number, and the API will locate and return that ledger, provided your RPC instance is backed by a data lake that contains the historical data.
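As an illustration, here is a minimal sketch of a getLedgers JSON-RPC call using only the Python standard library. The endpoint URL is a placeholder, and the `startLedger` and `pagination.limit` parameter names reflect our reading of the getLedgers API reference; confirm them there before relying on this.

```python
# Sketch: build and send a getLedgers JSON-RPC request.
# ASSUMPTIONS: RPC_URL is a hypothetical endpoint; parameter names follow
# our reading of the getLedgers reference.
import json
import urllib.request

RPC_URL = "http://localhost:8000"  # hypothetical; use your own RPC endpoint


def get_ledgers_payload(start_ledger: int, limit: int = 1, request_id: int = 1) -> dict:
    """JSON-RPC 2.0 body asking for `limit` ledgers starting at `start_ledger`."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "getLedgers",
        "params": {"startLedger": start_ledger, "pagination": {"limit": limit}},
    }


def get_ledgers(start_ledger: int, limit: int = 1, url: str = RPC_URL,
                timeout: float = 60.0) -> dict:
    """POST the request and return the `result` object, raising on RPC errors."""
    body = json.dumps(get_ledgers_payload(start_ledger, limit)).encode()
    req = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)
    if "error" in reply:
        raise RuntimeError(reply["error"])
    return reply["result"]
```

Against an RPC instance backed by a data lake, a call such as `get_ledgers(1000, limit=5)` should succeed even though ledger 1000 is far outside the local retention window.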

Note that there is no change to how new ledgers are ingested: they continue to be ingested directly from Stellar Core, not via the data lake. All other existing RPC endpoints continue to function as before, serving data within the local retention window.
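For getLedgers, the behaviour described above can be modelled as a simple routing decision: ledgers inside the local window come from the local database, older ones from the data lake when one is configured. This is an illustrative model under that assumption, not RPC's actual implementation, and every name in it is hypothetical.

```python
# Sketch: illustrative routing model for a getLedgers lookup.
# ASSUMPTION: hypothetical names; not RPC's real code.
from enum import Enum


class Source(Enum):
    LOCAL_DB = "local database"
    DATA_LAKE = "data lake"


def choose_source(seq: int, oldest_local: int, latest_local: int, has_data_lake: bool) -> Source:
    """Pick where ledger `seq` would be served from."""
    if seq > latest_local:
        raise ValueError(f"ledger {seq} has not been ingested yet")
    if seq >= oldest_local:
        return Source.LOCAL_DB  # inside the retention window
    if has_data_lake:
        return Source.DATA_LAKE  # older history, fetched from object storage
    raise LookupError(f"ledger {seq} is outside the local retention window")
```

Without a data lake, the last branch reflects the pre-integration behaviour: requests for pruned ledgers simply fail.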

Performance considerations

Fetching data from a cloud-based data lake is inherently slower than accessing locally stored data. To accommodate this, the default server timeout for the getLedgers endpoint has been increased. In our testing, no timeouts were observed under normal conditions.

Actual performance may still vary depending on network conditions and your data lake provider, so you can adjust the server timeout to suit your environment.
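On the client side, a slow historical fetch can also be absorbed with retries. Here is a minimal sketch of exponential backoff, assuming `fetch` is any zero-argument callable (for example, a hypothetical wrapper around a getLedgers request) that raises `TimeoutError` on timeout. It complements, and does not replace, the server-side timeout setting.

```python
# Sketch: retry a slow call with exponential backoff.
# ASSUMPTION: `fetch` raises TimeoutError when it times out.
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(fetch: Callable[[], T], attempts: int = 3, base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `fetch`, waiting base_delay * 2**n seconds between timed-out attempts."""
    for attempt in range(attempts):
        try:
            return fetch()
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the timeout
            sleep(base_delay * 2 ** attempt)  # 1 s, 2 s, 4 s, ... by default
    raise ValueError("attempts must be at least 1")
```

Injecting `sleep` keeps the helper easy to test without real delays.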

Cost implications

The data lake stores data efficiently in a compressed format. As of mid-2025, the complete data lake is approximately 3.8 TB and grows by roughly 0.5 TB per year.
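For capacity planning, these figures give a simple linear projection. The sketch assumes growth stays at about 0.5 TB per year, which is an assumption: actual growth depends on network activity.

```python
# Sketch: linear projection of data lake size from the mid-2025 figures.
# ASSUMPTION: growth remains linear at ~0.5 TB/year.
BASE_SIZE_TB = 3.8         # approximate size as of mid-2025
GROWTH_TB_PER_YEAR = 0.5   # approximate annual growth


def projected_size_tb(years_after_mid_2025: float) -> float:
    """Estimated total size in TB, `years_after_mid_2025` years later."""
    return BASE_SIZE_TB + GROWTH_TB_PER_YEAR * years_after_mid_2025
```

Two years on, for instance, this projects roughly 4.8 TB.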

Here’s a rough estimate of the costs involved in hosting your own data lake (actual costs may vary by cloud provider and plan):

- Bootstrap cost: One-time cost of around $600 to create and backfill the data lake.

- Monthly ongoing costs: ~$160 total

  - $60/month for compute (to continuously export new ledgers)

  - $100/month for storage

- Egress costs may apply depending on usage
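Putting the estimates together, a minimal sketch of the cumulative cost of self-hosting, assuming monthly costs stay flat and leaving egress out because it depends on usage:

```python
# Sketch: cumulative self-hosting cost from the rough estimates above.
# ASSUMPTIONS: flat monthly costs; egress excluded.
BOOTSTRAP_USD = 600
COMPUTE_USD_PER_MONTH = 60
STORAGE_USD_PER_MONTH = 100


def cumulative_cost_usd(months: int) -> int:
    """One-time bootstrap plus `months` of compute and storage."""
    return BOOTSTRAP_USD + months * (COMPUTE_USD_PER_MONTH + STORAGE_USD_PER_MONTH)
```

By this estimate, the first year comes to about $2,520 ($600 + 12 × $160).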

🚀 Ready to access the full Stellar ledger history?

- Configure your RPC instance to tap into the ledger data lake and gain access to complete historical ledger data.

- Refer to these documents for more information:

If you have questions or ideas to improve the integration, open an issue on our GitHub repository or discuss with the community on Discord.