CDP and RPC? More like ez and pz.

Author

George Kudrayvtsev

So my teammates on the Platform Team recently released and announced the Composable Data Platform (CDP) and Galexie. I wasn't really involved in the planning or development of this project, but the implications of CDP's flexibility were so promising that I had to try it out. They’ve been hyping it up in the Discord Developer Meeting and in a recent blog post, and it sounded a liiiittle too good to be true.

The cynic in me had to give it a go, and even though I could DM my coworkers for help, I was determined to do that as little as possible. This post is a brief foray into my experimentation with using CDP to add backfilling support to Stellar RPC.

Spoiler Alert: It really was that easy.

Motivation

If you've been tinkering around with the Stellar smart contract platform, you've probably also tried running Stellar RPC so that you can run everything locally (unless you're using one of our ecosystem's RPC providers!). You've probably also encountered one of its intentional design decisions: it's a live gateway that is not very concerned with historical data. This is what makes it so lightweight and easy to run. It does provide small windows of history for transactions and events, but these are "forward looking": if you configure RPC to store 24 hours of ledger history, it won't spend a bunch of time trying to backfill the last 24 hours, but will rather just keep the next 24 hours in the database and then trim it on a rolling basis. The goal is to get you moving forward with your project as quickly as possible, in contrast to Horizon's philosophy, which is more centered around deep history management.

The advent of CDP, however, made me wonder if it would make it easy to incorporate backfill into RPC without having to put up with the slow replay and startup lag that backfilling directly from captive core's replay mechanism would incur.

TL;DR: half an hour and 80 lines of code later (half of which is just error handling thanks to Golang's pedantry), I could have RPC backfill its database with a day of history (around 28GB of data) in around 10 minutes.

Experimentation

First, we need a data store of ledger metadata. Admittedly, I cheated a bit on this part since the team had already exported everything to our GCS bucket and I didn't have to do any exporting myself. But once CDP takes off, I wouldn't be surprised to see ecosystem providers allow access to their data lakes to developers in a similar fashion to what we see with RPC. It's also important to remember that CDP is an "export a range once, ingest it everywhere" model (as opposed to Horizon which needs captive core to generate data on the fly and then subsequently ingest it every time for any range you want to backfill), so even if you decide to run galexie to build the data lake yourself, you'll only have to put up with the export process once, as opposed to every time.

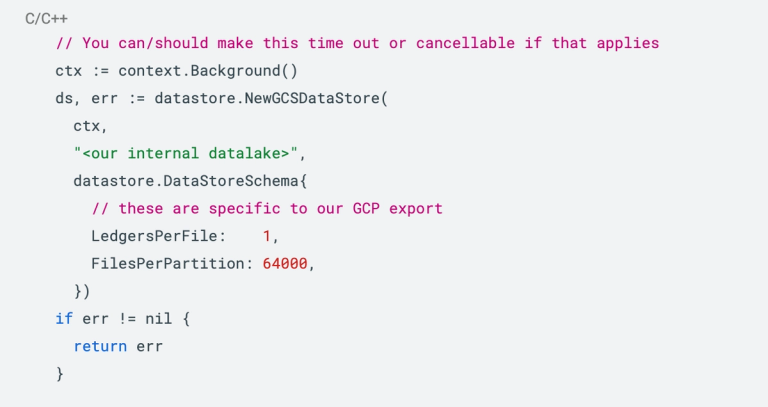

Connecting to the lake is easy:

Note: This step does require that your machine is configured with the gcloud CLI. Once you have authenticated yourself and your credential file is stored on disk (on my machine it lives in ~/.config/gcloud), it will automagically get picked up by the above connection code.

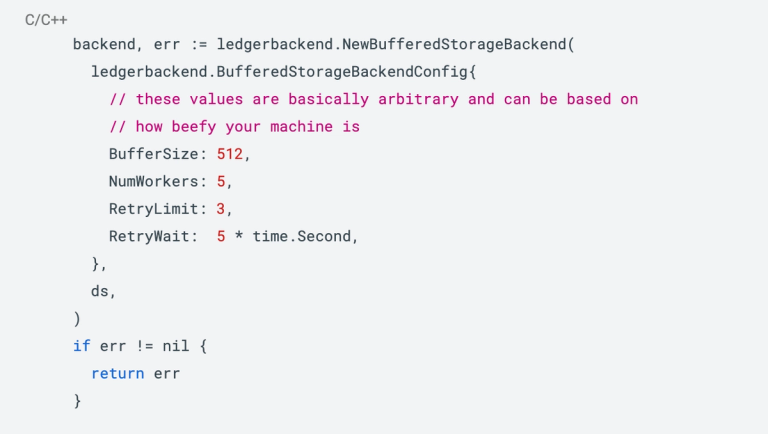

Second, we need to use the new BufferedStorageBackend in the ingest library which leverages the data lake to provide efficient, parallel readers to fetch ledgers:

Note: There’s some benchmarking the team did on the configuration values here that you might find useful depending on how you export your data.
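To give a feel for what "efficient, parallel readers" means, here is a minimal, stdlib-only sketch of the general technique: a pool of workers fetches ledgers concurrently while the caller still receives them in sequence order. This is not the ingest library's implementation; `readOrdered`, `fetchFunc`, and the `numWorkers`/`bufferSize` parameters are hypothetical names made up for illustration, and the fetch function just fabricates a string instead of talking to a data lake.

```go
package main

import (
	"fmt"
	"sync"
)

// fetchFunc is a hypothetical stand-in for downloading one ledger's
// metadata from the data lake.
type fetchFunc func(seq uint32) string

// readOrdered fetches ledgers [first, last] with numWorkers goroutines and
// returns the results in ascending sequence order. bufferSize is the
// capacity of the job and result channels.
func readOrdered(first, last uint32, numWorkers, bufferSize int, fetch fetchFunc) []string {
	if first > last {
		return nil
	}
	type result struct {
		seq  uint32
		data string
	}
	jobs := make(chan uint32, bufferSize)
	results := make(chan result, bufferSize)

	// Workers pull sequence numbers and fetch them concurrently.
	var wg sync.WaitGroup
	for w := 0; w < numWorkers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for seq := range jobs {
				results <- result{seq, fetch(seq)}
			}
		}()
	}

	// Producer: enqueue the range, then close results once all workers finish.
	go func() {
		for seq := first; seq <= last; seq++ {
			jobs <- seq
		}
		close(jobs)
		wg.Wait()
		close(results)
	}()

	// Consumer: results may arrive out of order, so park them in a map
	// until the next expected sequence number shows up.
	pending := make(map[uint32]string)
	out := make([]string, 0, last-first+1)
	next := first
	for r := range results {
		pending[r.seq] = r.data
		for {
			data, ok := pending[next]
			if !ok {
				break
			}
			out = append(out, data)
			delete(pending, next)
			next++
		}
	}
	return out
}

func main() {
	fetch := func(seq uint32) string { return fmt.Sprintf("ledger-%d", seq) }
	ledgers := readOrdered(100, 109, 4, 8, fetch)
	fmt.Println(len(ledgers), ledgers[0], ledgers[len(ledgers)-1])
}
```

The worker count and buffer size are the knobs worth tuning here: more workers hide per-object download latency, while the buffer limits how far the fetchers can run ahead of the consumer.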

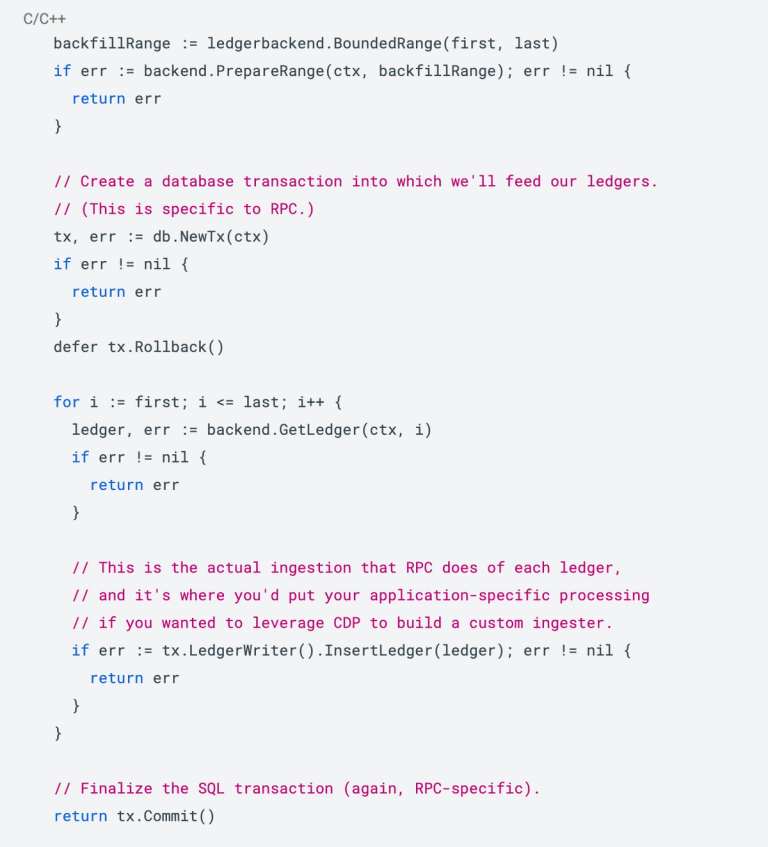

Finally, all we have to do is decide on the ledger range we want to fetch and backfill. I had to add some boring calculations to the RPC code that varied based on whether or not the database was empty that I won't replicate here, but you can just assume we now have the first/last variables representing the range we want to backfill. At that point, the actual backfill becomes trivial:
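The shape of that final step can be sketched without any Stellar dependencies. The version below is an illustration under stated assumptions, not the RPC code: `ledgerSource` and `ledgerSink` are hypothetical stand-ins for the ledger backend and the RPC database, `fakeLake` and `memoryDB` are toy implementations, and `backfillRange` is one plausible guess at the "boring calculations" (end at the network tip when the database is empty, otherwise end just before the oldest stored ledger).

```go
package main

import (
	"errors"
	"fmt"
)

// ledgerSource is a hypothetical stand-in for a ledger backend: prepare a
// range once, then fetch each ledger by sequence number.
type ledgerSource interface {
	PrepareRange(first, last uint32) error
	GetLedger(seq uint32) (string, error)
}

// ledgerSink is a hypothetical stand-in for the RPC database writer.
type ledgerSink interface {
	Ingest(seq uint32, meta string) error
}

// backfillRange picks the inclusive range [first, last] covering `window`
// ledgers. oldestInDB == 0 means the database is empty (assumption).
func backfillRange(latest, oldestInDB, window uint32) (uint32, uint32, error) {
	if window == 0 {
		return 0, 0, errors.New("window must be positive")
	}
	last := latest
	if oldestInDB != 0 {
		last = oldestInDB - 1 // stop right before what is already stored
	}
	if last == 0 {
		return 0, 0, errors.New("nothing to backfill")
	}
	first := uint32(1) // clamp to the first ledger if the window is too big
	if last >= window {
		first = last - window + 1
	}
	return first, last, nil
}

// backfill copies every ledger in [first, last] from src into dst and
// returns how many ledgers were ingested.
func backfill(src ledgerSource, dst ledgerSink, first, last uint32) (int, error) {
	if err := src.PrepareRange(first, last); err != nil {
		return 0, fmt.Errorf("preparing range [%d, %d]: %w", first, last, err)
	}
	count := 0
	for seq := first; seq <= last; seq++ {
		meta, err := src.GetLedger(seq)
		if err != nil {
			return count, fmt.Errorf("fetching ledger %d: %w", seq, err)
		}
		if err := dst.Ingest(seq, meta); err != nil {
			return count, fmt.Errorf("ingesting ledger %d: %w", seq, err)
		}
		count++
	}
	return count, nil
}

// fakeLake fabricates ledger metadata instead of reading a real data lake.
type fakeLake struct{}

func (fakeLake) PrepareRange(first, last uint32) error {
	if first > last {
		return errors.New("empty range")
	}
	return nil
}

func (fakeLake) GetLedger(seq uint32) (string, error) {
	return fmt.Sprintf("meta-%d", seq), nil
}

// memoryDB keeps ingested ledgers in a map.
type memoryDB struct{ ledgers map[uint32]string }

func (db *memoryDB) Ingest(seq uint32, meta string) error {
	db.ledgers[seq] = meta
	return nil
}

func main() {
	first, last, err := backfillRange(50000, 0, 17280)
	if err != nil {
		panic(err)
	}
	db := &memoryDB{ledgers: map[uint32]string{}}
	n, err := backfill(fakeLake{}, db, first, last)
	if err != nil {
		panic(err)
	}
	fmt.Printf("backfilled %d ledgers in [%d, %d]\n", n, first, last)
}
```

Most of the lines are error handling, which matches the experience described above: the loop itself is just prepare, fetch, ingest.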

And that's all she wrote! There was some other stuff to finagle regarding database migrations—we want all migrations to "reoccur" in case of a backfill since e.g., transactions won't be ingested yet—but that’s orthogonal to the quick CDP tutorial here.

Results

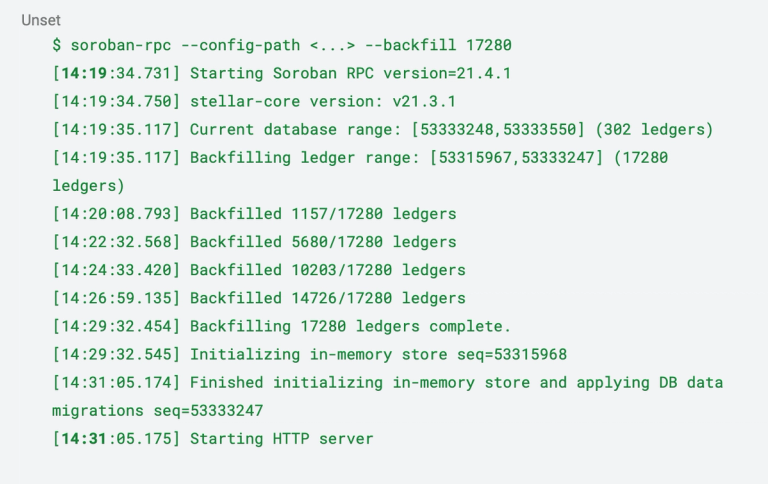

First, I ran the RPC service the "normal" way: catch up to the latest tip of the network and ingest a few ledgers. Then, I stopped it and asked it to backfill the last 17,280 ledgers, which is approximately a day's worth. On my machine (which admittedly is a big fat caveat), that process only took about 12 minutes:

If we had done this via captive core, catching it up to the network and then performing a replay of this ledger range, it would've taken upwards of an hour! Furthermore, a bunch of that time would've been spent just on the catchup phase, and that cost would be repeated every time we wanted to backfill.
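For a quick sanity check on the numbers in this section, the arithmetic can be written out. The sketch assumes a fixed 5-second ledger close time, which is what makes 17,280 ledgers come out to exactly one day; real close times vary a little. The function names are made up for this example.

```go
package main

import "fmt"

// ledgersIn returns how many ledgers close in windowSeconds, assuming a
// fixed close time per ledger. It returns 0 if closeTimeSeconds is 0.
func ledgersIn(windowSeconds, closeTimeSeconds uint32) uint32 {
	if closeTimeSeconds == 0 {
		return 0
	}
	return windowSeconds / closeTimeSeconds
}

// ledgersPerSecond returns the average ingestion rate for a backfill of
// `ledgers` ledgers that took elapsedSeconds.
func ledgersPerSecond(ledgers uint32, elapsedSeconds float64) float64 {
	return float64(ledgers) / elapsedSeconds
}

func main() {
	const closeTime = 5 // seconds per ledger (assumption)

	day := ledgersIn(24*60*60, closeTime)
	rate := ledgersPerSecond(day, 12*60) // the ~12 minute run from this post

	fmt.Printf("%d ledgers per day\n", day)
	fmt.Printf("%.0f ledgers ingested per second\n", rate)
	fmt.Printf("%.0fx faster than the network produces ledgers\n", rate*closeTime)
}
```

In other words, a day of history in about 12 minutes works out to roughly 24 ledgers per second, or about 120 times faster than the network closes them.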

Ezpz

Whelp, they weren’t exaggerating: it really was that easy. It's unlikely that backfilling will make it into RPC anytime soon, but the fact that I could whip up a prototype in a single afternoon confirms that CDP is just as powerful as my team claimed!

CDP’s flexibility means gathering insights about ledger data is fast and easy: you could track payments between accounts, watch DEX activity, index contract events and interactions, observe state archiving, or anything else you might be interested in. The ledger is now our oyster!

Want to try CDP out for yourself? Check out the Galexie documentation to build a data source and the sample code in this very post to use BufferedStorageBackend to build your own efficient and composable ingestion pipeline!