Hubble: now faster than light

Author: Simon Chow

Publishing date

Data

Hubble

Welcome to the latest installment in our series on the Composable Data Platform (CDP), the next-generation data-access platform on Stellar. Today, we're excited to introduce our first case study: refactoring Hubble’s data movement framework, Stellar ETL, to use CDP.

Now that we have a basic understanding of CDP from the introduction and Galexie posts, let's dive into a real-world application: Stellar ETL, which has been refactored to take full advantage of CDP. By the end of this article, you’ll see how fast, cheap, and easy to use CDP really is!

What are Hubble and Stellar ETL?

Hubble is an open-source, publicly available dataset that provides a complete historical record of the Stellar network. Stellar ETL is the application that Hubble uses to ingest Stellar network data. To learn more about Hubble and Stellar ETL, check out our Developer Docs and blog post.

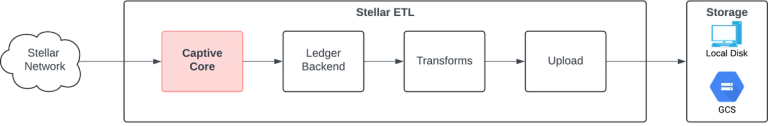

Original architecture overview

The original design of Stellar ETL tightly coupled data extraction and transformation in a single application. This means that Stellar ETL starts a new Captive Core instance to extract data for every ledger range it processes (highlighted in red in the diagram above). This works, but it comes with several pain points:

- It’s slow: Captive Core takes 15+ minutes to start up

- It’s expensive: Captive Core needs its own resources, adding to your compute costs

- It’s hard to use: you need to maintain a Captive Core instance on top of your application

It’s Slow: Are we there yet?

Captive Core takes a long time to start up, roughly 15 minutes. This means you waste 15 minutes every single time you run Stellar ETL. Imagine how much time is wasted if you want to continuously process Stellar network data in 30-minute batches: that is 48 runs per day, each paying 15 minutes of startup, or ~12 hours per day of Captive Core startup time! Not to mention that the data you extract will be at least 15 minutes behind the current network ledger. This only gets worse when backfilling a large range of historical data. Because Stellar ETL is single-threaded, backfilling the full history of the Stellar network would take months, or incur prohibitive costs for additional, expensive parallel workers.

It’s Expensive: Where’d all my money go?

Stellar ETL is a simple, lightweight application that transforms raw Stellar network data into a human-readable format. It's like using grep and sed to search and format data into readable text. Would you really want grep and sed to require a 4-core, 16 GB machine? Hopefully not. That behemoth of a machine is there only for Captive Core, which accounts for the majority of Stellar ETL’s resource requirements and cost.

It’s Hard to Use: What do I do with my hands?

On top of maintaining Stellar ETL, we need to maintain an up-to-date Captive Core instance. That means maintaining an extra Captive Core configuration file as well as the Captive Core image itself, so you now need to be an expert in both your application and the intricacies of Captive Core. Captive Core version updates and protocol upgrades require you to redeploy your application, even when your own code hasn't changed.

Another hot topic is debugging. Let’s say a bug in Stellar ETL caused a specific ledger range to be processed incorrectly. To test the fix against that ledger range, we would need to run Captive Core every single time we changed the code (again, that’s 15+ minutes of startup time per run). Once the bug is fixed, correcting the bad data also requires a Captive Core instance.

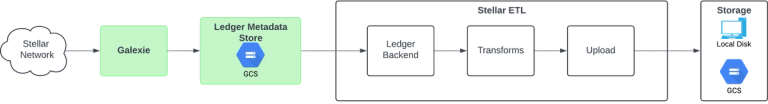

Replacing Captive Core with CDP

Clearly, the problem with Hubble's original architecture is Captive Core. So how do we fix this? By removing Captive Core and replacing it with CDP components!

CDP was very easy to drop in as a replacement for Captive Core. In essence, we decouple Captive Core from Stellar ETL by using Galexie and a new Ledger Backend. As a recap, Galexie is a simple, lightweight application that bundles Stellar network data, processes it, and writes it to an external data store. We can then use this external data store in place of Captive Core, and the data store becomes the source of record for Stellar network data. The Ledger Backend, which Stellar ETL uses to access Stellar network data, works largely the same way as before; it just reads from the Ledger Metadata Store in GCS (Google Cloud Storage) instead of the Captive Core data stream.

Was it worth it?

Let’s compare the new version of Stellar ETL to the old version:

- It’s faster: access Stellar network data in seconds, not minutes

- It’s cheaper: runs on a tiny machine

- It’s easy to use: maintain your application code, nothing more

It’s Faster: I have time to play pickleball now?

Without the need to run a Captive Core instance, we are now limited only by the performance of our application code. Instead of 15+ minutes to ingest a defined ledger range of data, it now takes under 1 minute. At the same 30-minute batch interval, Stellar ETL now spends at most 48 minutes per day (48 runs of under 1 minute each) versus 12 hours per day. That’s a 93% time reduction! We can also run Stellar ETL more frequently, even as micro-batches, so that Hubble contains near real-time data.

Backfilling is now actually viable: backfilling the full history of Stellar network data takes under 4 days instead of months. Accessing preprocessed data from a Ledger Metadata Store is significantly faster than iteratively pulling data from Captive Core. Plus, reads from a data store can be parallelized, whereas reads from a single Captive Core instance cannot.
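Because each ledger range in the data store can be read independently, a backfill can be split into chunks and spread across workers. Here is a minimal Go sketch of that fan-out with goroutines. `splitRange`, `backfill`, the chunk size, the worker count, and the `processChunk` callback (standing in for "read these ledgers from the data store and transform them") are all assumptions for illustration, not Stellar ETL's actual backfill code.

```go
package main

import (
	"fmt"
	"sync"
)

// LedgerRange is an inclusive range of ledger sequence numbers.
type LedgerRange struct{ Start, End uint32 }

// splitRange cuts [start, end] into consecutive chunks of at most size ledgers.
func splitRange(start, end, size uint32) []LedgerRange {
	var out []LedgerRange
	for s := start; s <= end; s += size {
		e := s + size - 1
		if e > end || e < s { // clamp to end; also guards size 0 and uint32 overflow
			e = end
		}
		out = append(out, LedgerRange{s, e})
		if e == end {
			break
		}
	}
	return out
}

// backfill runs processChunk on every chunk using a fixed pool of workers and
// returns the sum of their results. Chunks are independent, which is what
// makes this parallelism possible when reading from a data store.
func backfill(chunks []LedgerRange, workers int, processChunk func(LedgerRange) int) int {
	if workers < 1 {
		workers = 1
	}
	jobs := make(chan LedgerRange)
	var (
		mu    sync.Mutex
		wg    sync.WaitGroup
		total int
	)
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for r := range jobs {
				n := processChunk(r)
				mu.Lock()
				total += n
				mu.Unlock()
			}
		}()
	}
	for _, c := range chunks {
		jobs <- c
	}
	close(jobs)
	wg.Wait()
	return total
}

func main() {
	chunks := splitRange(1, 1000, 64)
	count := backfill(chunks, 8, func(r LedgerRange) int {
		// Placeholder work: just count the ledgers in the chunk.
		return int(r.End - r.Start + 1)
	})
	fmt.Println(len(chunks), count) // 16 1000
}
```

Chunk size trades scheduling overhead against load balance; the values here are arbitrary.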

It’s Cheaper: What do I do with all this money?

Without Captive Core, we have cut our costs by ~90%, because we can now size our machines to fit our application. Originally, our Kubernetes pods needed 3.5 vCPU and 20 GiB of memory. Now they need only 0.5 vCPU and 1 GiB of memory. That comes out to ~$10/month vs. ~$100/month in resource costs.

It’s Easy to Use: Yes or yes?

Without Captive Core, the maintenance burden is decoupled as well. There’s no need to update your application just because Captive Core had an unrelated version update, and no need to maintain a separate configuration file that has nothing to do with your application. All of this means less code and less complexity, while keeping the same easy-to-use Ledger Backend interface.

Speaking of debugging, it’s now very easy to rerun things locally. Iterative debugging and development are no longer bottlenecked by long startup times, and integration tests are now actually viable. Development is limited only by your imagination!

What’s next?

We’re working on showing you how other platform products, like Horizon and Stellar RPC, use CDP. Keep an eye out for those blog posts in the near future!

Right now, our team is building a library that parses raw Ledger Metadata into a more user-friendly format. The library will include an intuitive event streaming flow consisting of a public ledger producer and well-defined data transformations. The public ledger producer will automatically stream Ledger Metadata from CDP, and the data transformations, or processors, will parse that stream into a user-friendly format.

Our aim is to make application development faster and easier: you’ll be able to stream, transform, and filter Stellar network data as you see fit, instead of getting into the nitty-gritty of raw Ledger Metadata.
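The library was still in progress when this post was written, so its API isn't shown here. As a rough illustration of the producer/processor pattern described above, here is a Go sketch using channels: `produce` stands in for the public ledger producer, and `paymentsOnly` is an example processor that filters and reformats ledgers. Every name and type in it (`LedgerMeta`, `Processor`, `run`, the string-encoded operations) is hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// LedgerMeta is a hypothetical placeholder for raw ledger metadata.
type LedgerMeta struct {
	Sequence uint32
	Ops      []string
}

// produce is a hypothetical stand-in for the public ledger producer: it emits
// ledgers in order on a channel and closes the channel when done.
func produce(ledgers []LedgerMeta) <-chan LedgerMeta {
	out := make(chan LedgerMeta)
	go func() {
		defer close(out)
		for _, l := range ledgers {
			out <- l
		}
	}()
	return out
}

// Processor turns one ledger into zero or more user-friendly records.
type Processor func(LedgerMeta) []string

// paymentsOnly is an example processor: it keeps only payment operations
// and formats each one as a readable record.
func paymentsOnly(l LedgerMeta) []string {
	var recs []string
	for _, op := range l.Ops {
		if strings.HasPrefix(op, "payment") {
			recs = append(recs, fmt.Sprintf("ledger %d: %s", l.Sequence, op))
		}
	}
	return recs
}

// run drains the stream, applies every processor to each ledger,
// and collects all of their output.
func run(stream <-chan LedgerMeta, procs ...Processor) []string {
	var out []string
	for l := range stream {
		for _, p := range procs {
			out = append(out, p(l)...)
		}
	}
	return out
}

func main() {
	ledgers := []LedgerMeta{
		{Sequence: 1, Ops: []string{"payment 10 XLM", "create_account"}},
		{Sequence: 2, Ops: []string{"payment 5 XLM"}},
	}
	for _, rec := range run(produce(ledgers), paymentsOnly) {
		fmt.Println(rec)
	}
}
```

Keeping processors as plain functions over a stream makes it easy to add, remove, or combine transformations without touching how ledgers are fetched.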
