Horizon: Accelerating Reingestion Performance with CDP

Author

Urvi Savla

Publishing date

This blog post is the next in the Composable Data Platform (CDP) blog post series. The previous post, Hubble: Now Faster than Light, detailed the re-architecture of stellar-ETL using CDP and its benefits. This post explains how we made Horizon reingestion 9x faster using CDP components.

Background

What is reingestion

The size of Horizon data is enormous, and it is impractical for each instance to store the entire network history. Most Horizon instances, including those hosted by the Stellar Development Foundation (SDF), are configured with a specific retention window (we recommend 30 days) to manage data size.

However, there are scenarios where you may want to retrieve and process older ledger data outside this retention window. Horizon supports this through a process called reingestion, which fetches and reprocesses historical Stellar ledger data.

There are several key situations where a Horizon user might need to perform reingestion:

- Setting up a new server: For users who want to backfill data within their retention window, reingestion can be used to hydrate their database with older ledger data.

- On an existing instance: Reingestion can be used to fetch data outside the retention window, such as for auditing or reconciliation purposes.

- After a pause in operations: If a Horizon instance has been inactive for a period, reingestion may be necessary to bring it up to date, as it can be faster and more efficient than using live ingestion to catch up to the current network state.

Problem Statement

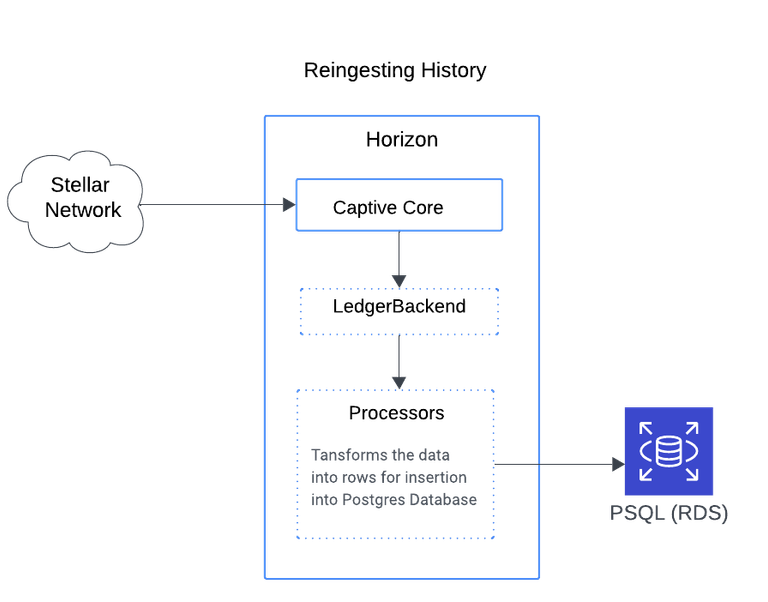

Current architecture

Reingestion is both time-consuming and resource-intensive, taking several days to process just one month of recent ledger history and months to reprocess the entire history.

The process is demanding because it relies on Captive Core to retrieve and process ledger data.

Solution

New architecture

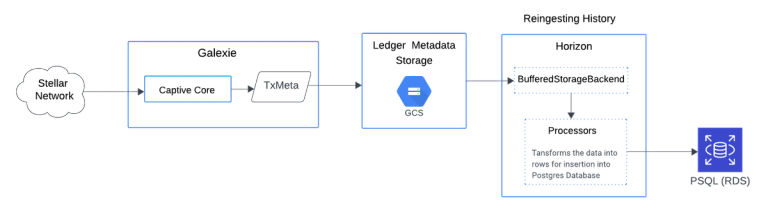

Central to CDP is the data lake of precomputed ledger metadata. For details on how to create this data lake using Galexie, refer to the Introducing Galexie: Efficiently Extract and Store Stellar Data blog post.

Ingesting data from the data lake is significantly faster. What would normally take months to reingest now takes just a few days.

This speed improvement is due to two main factors:

- No Captive Core startup time (no need to download history archives to build local state).

- No need to replay ledgers to compute ledger metadata.

Ledger metadata is readily available for direct download from Google Cloud Storage (GCS). Each file is compressed, making it very small, so minimal network bandwidth is required.

To accommodate the new architecture, the Horizon db reingest command now supports reingestion from the datastore. You invoke it much as you would for Captive Core, but with additional datastore configuration, such as the bucket address and data schema. For command and configuration details on using CDP for reingestion, refer to the Horizon reingestion guide.

Benchmarking

Captive Core vs. CDP

To benchmark the reingestion performance of Captive Core and CDP, we conducted tests under the following hardware setup:

Hardware Specifications

- EC2 Instance (m5.4xlarge), for running Horizon:

  - 16 vCPUs

  - 64 GB RAM

  - EBS-only storage

  - Network performance: up to 10 Gbps

  - Baseline IOPS: 4,750

- RDS Instance (db.r5.4xlarge), for running PostgreSQL 12:

  - 16 vCPUs

  - 128 GB RAM

  - 15 TB of storage

Parallel Reingestion

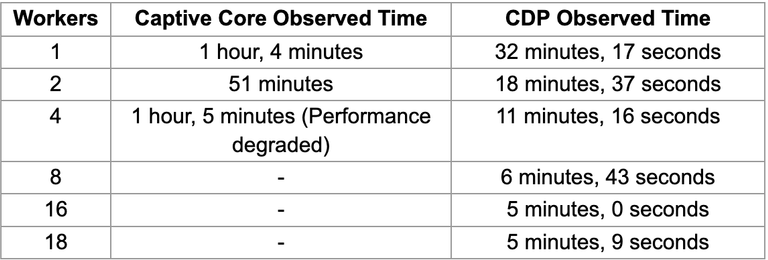

Horizon supports parallel reingestion, meaning the reingestion range is divided into subranges that are ingested simultaneously. To assess the level of parallelization achievable with each method (Captive Core and CDP), we reingested 10,000 ledgers with both at varying levels of parallelization. These are the results:

Captive Core: Performance was constrained by disk I/O and showed diminishing returns with more than four workers.

CDP: In contrast, CDP achieved much better parallelization, with optimal results using 16 workers.

For details on configuring parallel ingestion, refer to the guide on parallel ingestion workers.
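The range-splitting idea described above can be sketched in a few lines of Python. This is only an illustration of dividing a ledger range into subranges and processing them concurrently; it is not Horizon's implementation, and `split_ledger_range`, `reingest_subrange`, and `reingest_parallel` are hypothetical names introduced here.

```python
from concurrent.futures import ThreadPoolExecutor


def split_ledger_range(start: int, end: int, workers: int) -> list[tuple[int, int]]:
    """Split the inclusive range [start, end] into contiguous, near-equal subranges."""
    if start > end:
        raise ValueError("start must not exceed end")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    total = end - start + 1
    workers = min(workers, total)  # never create an empty subrange
    base, extra = divmod(total, workers)
    subranges = []
    lo = start
    for i in range(workers):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first subranges
        subranges.append((lo, lo + size - 1))
        lo += size
    return subranges


def reingest_subrange(bounds: tuple[int, int]) -> int:
    """Hypothetical stand-in for real ingestion work; returns the number of ledgers covered."""
    lo, hi = bounds
    return hi - lo + 1


def reingest_parallel(start: int, end: int, workers: int) -> int:
    """Process every subrange concurrently and return the total ledgers handled."""
    subranges = split_ledger_range(start, end, workers)
    with ThreadPoolExecutor(max_workers=len(subranges)) as pool:
        return sum(pool.map(reingest_subrange, subranges))
```

With the benchmark's 10,000 ledgers and 16 workers, `split_ledger_range(1, 10_000, 16)` yields 16 subranges of 625 ledgers each, from `(1, 625)` through `(9376, 10000)`.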

Reingesting Full History

Using the best parallel setup for each method, we measured the time to reingest 10,000 ledgers. However, older ledgers are less dense than recent ones and take less time to reingest, so a single sample of recent ledgers would overestimate the total. To account for this, we sampled 10,000 ledgers from each year since the inception of the Stellar network and extrapolated the time required to reingest the entire history.

The results show that reingestion using Captive Core is projected to take approximately 66 days, while the CDP (with precomputed ledger metadata) is expected to take around 7 days.
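The per-year extrapolation described above can be sketched as follows. This is a minimal illustration under assumptions, not the script used for the benchmark: the `YearSample` type, the `extrapolate_days` function, and the idea of scaling each year's sample time by that year's ledger count are introduced here for clarity, and the inputs in the usage note are placeholders rather than measurements.

```python
from dataclasses import dataclass

SAMPLE_SIZE = 10_000  # ledgers per sample, as in the benchmark
SECONDS_PER_DAY = 86_400


@dataclass
class YearSample:
    year: int
    ledgers_in_year: int   # assumed input: total ledgers closed during that year
    sample_seconds: float  # assumed input: time to reingest SAMPLE_SIZE ledgers from that year


def extrapolate_days(samples: list[YearSample]) -> float:
    """Scale each year's sampled rate to the full year, then sum across years."""
    total_seconds = 0.0
    for s in samples:
        seconds_per_ledger = s.sample_seconds / SAMPLE_SIZE
        total_seconds += seconds_per_ledger * s.ledgers_in_year
    return total_seconds / SECONDS_PER_DAY
```

For example, with two placeholder years, `extrapolate_days([YearSample(1, 1_000_000, 100.0), YearSample(2, 2_000_000, 400.0)])` sums 10,000 s and 80,000 s, giving roughly 1.04 days. The denser year dominates the total, which is why sampling every year matters.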

Performance Comparison: Captive Core vs. CDP:

In this evaluation, Captive Core ran with 2 parallel workers, while CDP ran with 16 parallel workers.
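As a quick check on the projected totals above (about 66 days for Captive Core and about 7 days for CDP), the speedup and the share of time saved work out as follows. The constant names are introduced here for illustration.

```python
CAPTIVE_CORE_DAYS = 66  # projected full-history reingestion time with Captive Core
CDP_DAYS = 7            # projected full-history reingestion time with CDP

speedup = CAPTIVE_CORE_DAYS / CDP_DAYS
reduction = 1 - CDP_DAYS / CAPTIVE_CORE_DAYS

print(f"speedup: {speedup:.1f}x")      # speedup: 9.4x
print(f"time saved: {reduction:.1%}")  # time saved: 89.4%
```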

Conclusion

CDP is faster and more efficient

With CDP, Horizon reingestion is now up to 9x faster, cutting processing times by over 85%. However, even with these improvements, using Horizon to serve full historical data requires massive amounts of storage: around 40 TB and growing fast. In most cases, building your own applications using CDP offers a better path forward.

CDP’s precomputed ledger metadata allows you to build a much smaller custom dataset. And if you need to reingest large amounts of data to populate that dataset, CDP gives your application the same performance benefits, making it ideal for creating efficient, focused applications.

This makes CDP a game-changer for anyone wanting to build flexible, scalable applications beyond Horizon. We encourage you to explore all that CDP offers for your own data needs!

