Blog Article

A New Sun on the Horizon

Author

George Kudrayvtsev

Horizon

API

With the release of Horizon 2.0, we’ve introduced a new way to run the infrastructure that powers the Stellar network. This paradigm shift enables large organizations and small developers alike to deploy Horizon with fewer resources, under looser constraints, and with far more flexibility than ever before.

In the past, you needed to run a Stellar Core node to deploy Horizon, which burdened you with heavy disk space requirements and careful configuration necessitated by Stellar Core. The old architecture's complexity didn't make sense for a lot of use-cases, and it wasn't negotiable: batteries were included whether you needed them or not.

Now, you can choose a setup that fits your use case. Instead of following a universal blueprint, you first answer an important question: What do you want to do? Do you want to run a validator? Or do you just want to run Horizon?

- Running a validator lets you: participate in consensus and improve network health and decentralization, take advantage of Stellar’s issuer-enforced finality feature, and vote on fundamental network changes (like inflation or fee changes).

- Running Horizon lets you: find out what happened on the blockchain in the past, find out the current state of the world (e.g. account balances), track new events that you care about, and submit transactions.
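As a rough illustration of the "current state of the world" use case, here is a minimal Python sketch that builds the URL for an account lookup and summarizes the balances in the response. The base URL, the account ID, and the sample JSON are made up for the example; the sample only mimics the general shape of an account response (a `balances` array with `balance`, `asset_type`, and `asset_code` fields), so treat it as an assumption rather than a complete schema.

```python
import json
from typing import List, Tuple

# Trimmed, made-up sample shaped like a Horizon account response.
# Real responses contain many more fields.
SAMPLE_ACCOUNT_JSON = """
{
  "id": "GEXAMPLE",
  "balances": [
    {"balance": "25.5000000", "asset_type": "credit_alphanum4",
     "asset_code": "USD", "asset_issuer": "GISSUER"},
    {"balance": "100.0000000", "asset_type": "native"}
  ]
}
"""


def account_url(horizon_base: str, account_id: str) -> str:
    """Build the account lookup URL for a given Horizon base URL."""
    return f"{horizon_base.rstrip('/')}/accounts/{account_id}"


def summarize_balances(account: dict) -> List[Tuple[str, str]]:
    """Return (asset label, balance) pairs, labeling the native asset as XLM."""
    summary = []
    for entry in account.get("balances", []):
        if entry["asset_type"] == "native":
            label = "XLM"
        else:
            label = entry["asset_code"]
        # Balances are kept as strings to avoid floating-point rounding.
        summary.append((label, entry["balance"]))
    return summary


if __name__ == "__main__":
    print(account_url("https://horizon.example.org/", "GEXAMPLE"))
    print(summarize_balances(json.loads(SAMPLE_ACCOUNT_JSON)))
```

In a real deployment you would fetch the JSON from your own Horizon instance (or use one of the Stellar SDKs) instead of parsing a hard-coded sample.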

If you decide you want to run a validator, then you should check out the documentation on configuring and deploying a Stellar Core node, whereas if you decide you just need Horizon, then you can keep it simple and read about deploying an API server.

Out with the old…

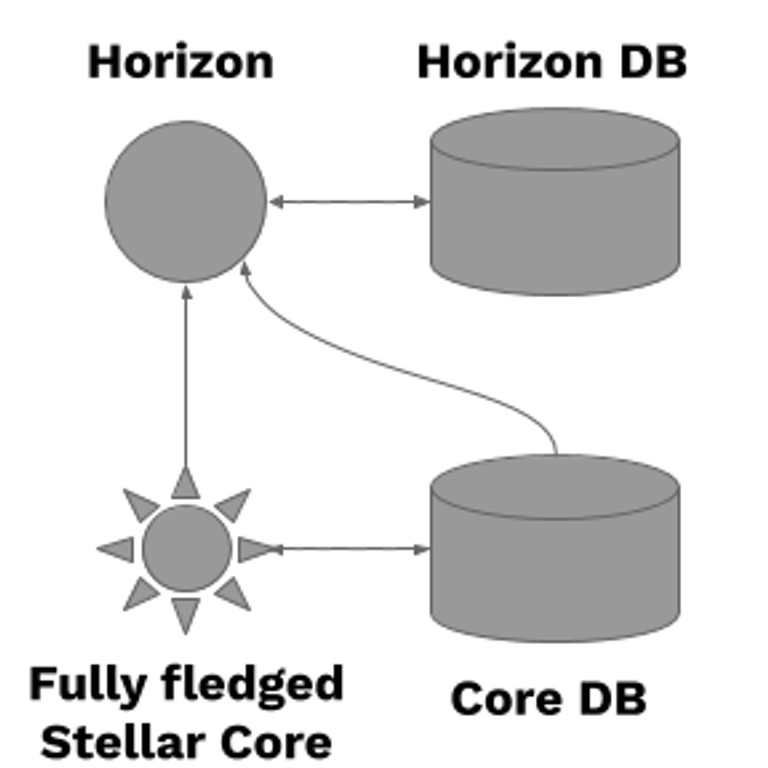

Here's what the old architecture looked like:

Some of the lowlights of this architecture should be apparent to anyone who deployed this architecture in the past:

- Setting up a Stellar Core node requires a safe configuration, and creating one is not easy. There are many details to get right (such as home domains, database URIs, and other one-off parameters) even if they aren’t your main focus.

- Reingestion was tedious: when SDF added new features to Horizon, sometimes organizations would need to reingest large swaths of history to be able to take advantage of them. It takes time for Horizon and Core to process ledgers into “transaction metadata” (that is, time-series data like balance history, account effects, etc.): a full reingestion of the pubnet history could take weeks, even on a powerful machine.

- Because of this complex process, Stellar Core had to hold on to hundreds of gigabytes of data for Horizon to facilitate ingestion. In turn, Horizon stores several terabytes of this metadata for querying purposes. Obviously, losing either of these to corruption or other mishaps can lead to a lot of downtime as these databases are recreated: Stellar Core would take a while to catch up to the current ledger from scratch, and Horizon would take even more time to reingest the entire network’s history again.

- Scaling was difficult: because Horizon and Stellar Core were tightly coupled, if you wanted more Horizon instances, you needed more Core instances. This made it harder to build out a serious production cluster.

- Naturally, heavy processing and large databases demanded more capable servers: more disk space, more processing power, etc.

…in with the new

Fortunately, that was then. Horizon 2.0 resolves all of these limitations, and this is now:

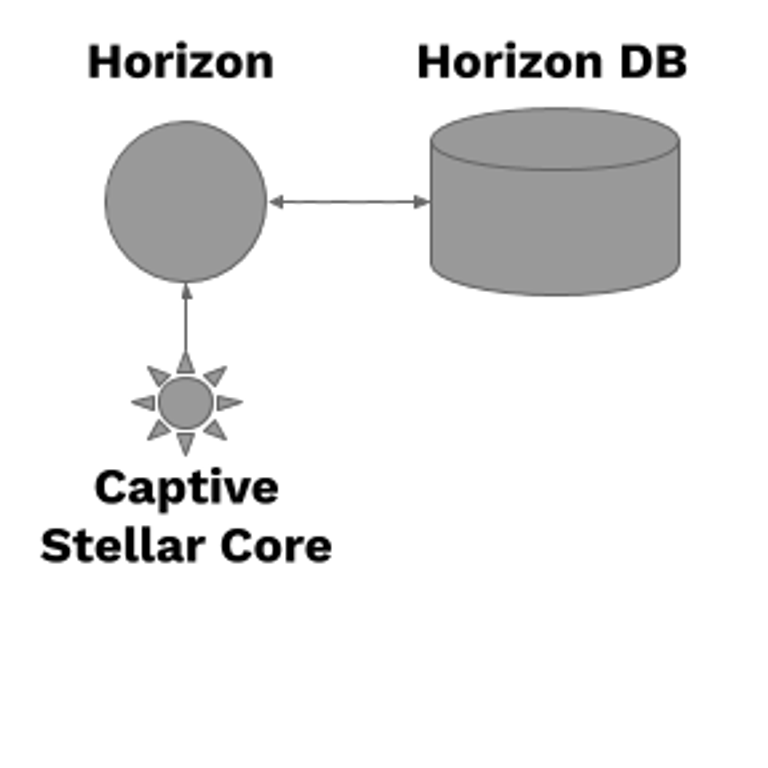

The beauty of the new, modular architecture is that it presents simpler configurations that can adapt to all kinds of use-cases:

- With an optimized version of Stellar Core (called Captive Core) that can process ledgers entirely in-memory and communicate over high-bandwidth OS mechanisms (pipes and sockets), there’s no separate Core database to maintain anymore.

- Ingestion is lightning fast: what used to take weeks even on high-end hardware can now be completed in just over a day. This is yet another improvement over previous ingestion performance enhancements. That means losing a Horizon database to corruption or needing to reingest the full history after an upgrade is no longer a big deal.

- Since Horizon knows exactly what it needs from Core and can manage the lifetime of its instance entirely, it actually generates configuration stubs for most of the necessary fields, letting you provide the bare minimum and focus on your use-case.

- Scaling a production Horizon 2.0 deployment is much easier with Captive Core. Each piece can be scaled independently: you can have any number of instances catered towards parallel ingestion, transaction submission, or simply handling requests. Since the last point is a read-only operation, request-serving instances are entirely decoupled from the database instances, allowing the latter to be scaled and replicated on their own.

- Further still, while transaction submission can still be done through a standalone Stellar Core instance, bundling Captive Core with each Horizon node instead adds a lot of resilience; there’s a lower risk of node failures taking down a cluster.
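To make the parallel ingestion idea more concrete, here is a small Python sketch that splits a ledger range into contiguous chunks, one per worker. It only illustrates the partitioning idea; `split_ledger_range` is a hypothetical helper, not Horizon's actual scheduling logic.

```python
from typing import List, Tuple


def split_ledger_range(start: int, end: int, workers: int) -> List[Tuple[int, int]]:
    """Split the inclusive ledger range [start, end] into contiguous chunks.

    Returns at most `workers` (first, last) pairs whose sizes differ by at
    most one ledger, so each worker gets a similar amount of work.
    """
    if start > end:
        raise ValueError("start must not exceed end")
    if workers < 1:
        raise ValueError("workers must be at least 1")

    total = end - start + 1
    workers = min(workers, total)  # never create empty chunks
    base, extra = divmod(total, workers)

    ranges = []
    cursor = start
    for i in range(workers):
        # The first `extra` chunks absorb the remainder, one ledger each.
        size = base + (1 if i < extra else 0)
        ranges.append((cursor, cursor + size - 1))
        cursor += size
    return ranges


if __name__ == "__main__":
    for first, last in split_ledger_range(1, 10, 3):
        print(f"worker ingests ledgers {first}..{last}")
```

Each chunk could then be handed to a separate ingestion instance, since the chunks do not overlap and together cover the whole range.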

While deploying a full Stellar Core node can still be an involved process, the introduction of Captive Core lets you focus almost entirely on deploying Horizon itself, so you can participate in the network without bearing the full responsibility of consensus management.
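The idea of one process managing another and reading its output over an OS pipe can be sketched in a few lines of Python. This is a toy model only: the child here is a stand-in Python one-liner that prints fake "ledger" lines, not Stellar Core, and none of the names below come from Horizon's codebase.

```python
import subprocess
import sys
from typing import List

# Stand-in child process: prints one line per fake "ledger" to stdout.
CHILD_CODE = "for seq in range(1, 4): print(f'ledger {seq}', flush=True)"


def read_from_child() -> List[str]:
    """Launch the child, stream its stdout over a pipe, and collect the lines."""
    with subprocess.Popen(
        [sys.executable, "-c", CHILD_CODE],
        stdout=subprocess.PIPE,  # the parent reads the child's output via a pipe
        text=True,
    ) as child:
        # Iterating over the pipe yields lines as the child produces them.
        lines = [line.strip() for line in child.stdout]

    # Leaving the `with` block waits for the child, so returncode is set here.
    if child.returncode != 0:
        raise RuntimeError(f"child exited with status {child.returncode}")
    return lines


if __name__ == "__main__":
    for record in read_from_child():
        print("parent received:", record)
```

Because the parent owns the child's whole lifetime, there is no separate service to configure or keep in sync, which is the property the paragraph above describes.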

Flexibility

Unlike selecting a starter Pokémon, changing your mind about your architecture after the fact is not difficult. If you suddenly find yourself invested in the decentralization of the network and want to run a validator, the standalone Stellar Core instance can be deployed independently while your Captive Core instance continues to work with Horizon. The inverse is also true: if you are running a validator and decide running Horizon is all you care about, all it takes is a bit of reconfiguration to introduce the Captive Core instance and enjoy the performance and architectural benefits it provides.

System Requirements

As already mentioned, the system requirements differ from those of the previous architecture, largely due to the changes in how Stellar Core interacts with Horizon. Specifically, the in-memory database requires around 3 GiB of RAM, and the disk space requirements have dropped significantly (again, by hundreds of gigabytes).

As always, a more powerful machine results in faster ingestion, but it’s worth noting that after an initial ingestion, staying “online” is much less resource-intensive. Furthermore, most organizations don’t actually need the full ledger history and can thus get away with ingesting much smaller ranges of transaction metadata.
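For a back-of-the-envelope sense of what a "smaller range" means, the sketch below estimates how many ledgers cover a given number of days and where such a window would start. It assumes a ledger closes roughly every 5 seconds, which is only an approximation; the function names and the example ledger number are hypothetical.

```python
SECONDS_PER_DAY = 86400
# Assumption: ledgers close about every 5 seconds. The real interval varies.
ASSUMED_LEDGER_CLOSE_SECONDS = 5


def ledgers_for_days(days: int, close_seconds: int = ASSUMED_LEDGER_CLOSE_SECONDS) -> int:
    """Estimate how many ledgers close in `days` days."""
    return (days * SECONDS_PER_DAY) // close_seconds


def start_ledger_for_window(latest_ledger: int, days: int) -> int:
    """Estimate the first ledger of a window covering the last `days` days.

    The result is clamped at 1 so it never goes below the start of history.
    """
    return max(1, latest_ledger - ledgers_for_days(days) + 1)


if __name__ == "__main__":
    latest = 1_000_000  # made-up ledger sequence number
    print("ledgers in 30 days:", ledgers_for_days(30))
    print("30-day window starts at ledger:", start_ledger_for_window(latest, 30))
```

Under this assumption, a 30-day window spans roughly half a million ledgers, a small fraction of the full history.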

Horizon 2.0 introduces massive performance and architecture benefits that make running Stellar infrastructure easier than ever:

- The optimized Captive Core can process transactions in-memory, meaning…

  - Disk space requirements have been vastly reduced (by 100s of GBs)!

- Ingestion is now more than an order of magnitude faster (weeks -> about a day), meaning…

  - The Horizon database is no longer precious: it can be rebuilt quickly!

- There’s no separate Stellar Core instance to manage, meaning…

  - No more database requirements for Stellar Core!

Excited about these changes? Check out the migration guide to get started today!